5G Digital Twin

Project Description

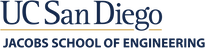

This project provides a platform for developing and validating solutions in the 5G cellular network domain, including multi-source data fusion in C-V2X networks and Reinforcement Learning (RL) algorithms for dynamic optimization. These solutions are designed to adapt to fluctuating Quality of Service (QoS) demands and resource needs. At the core of the project is a digital twin of a 5G network that replicates the Verizon 5G lab environment. The simulated framework mirrors the architectural and operational characteristics of the physical lab, so it supports rigorous data analysis and performance benchmarking. Through this platform, the project aims to support the development and validation of AI/ML solutions that make 5G network strategies more sophisticated and adaptive.

Verizon Lab Digital Twin Testbed

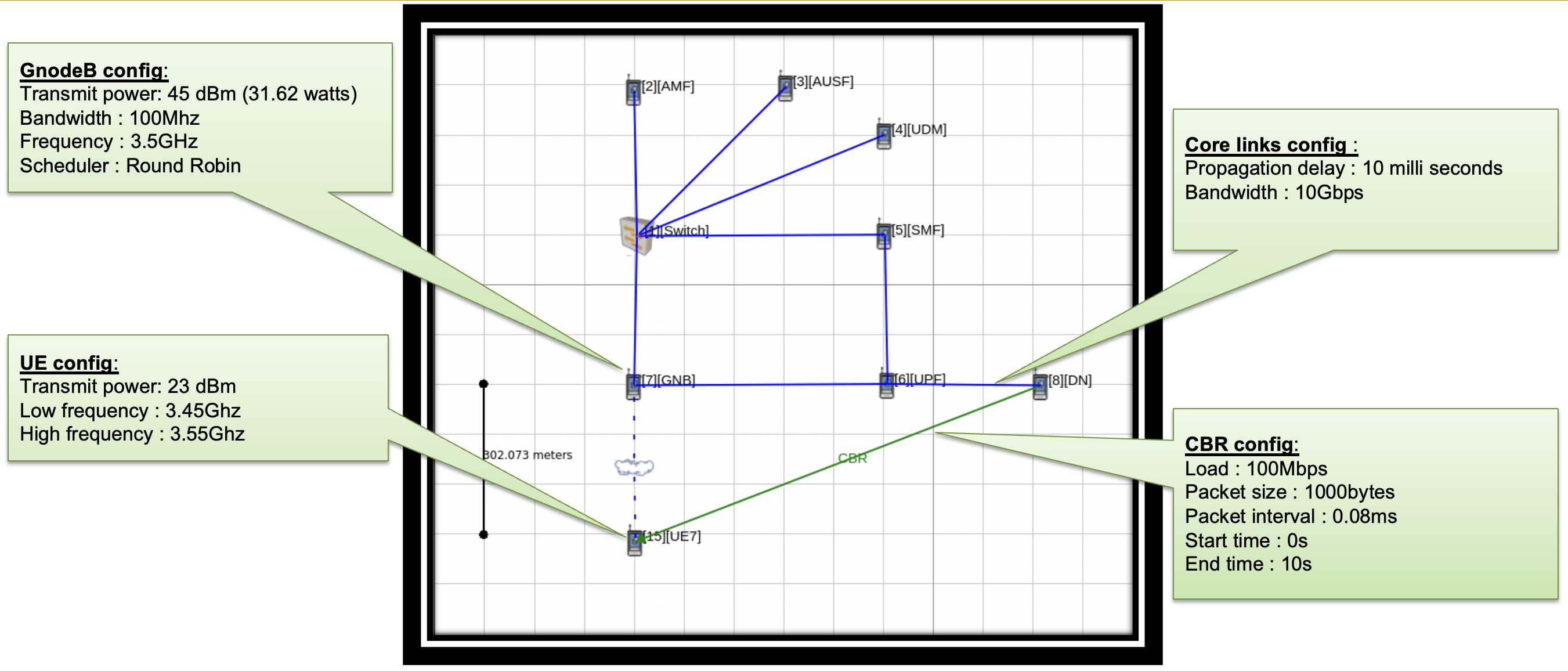

In partnership with Keysight Technologies and Verizon, this initiative uses the Keysight EXata platform to build high-fidelity digital twins of existing 5G networks. The digital twin's 5G core includes elements such as the Access and Mobility Management Function (AMF), Authentication Server Function (AUSF), Session Management Function (SMF), User Plane Function (UPF), and Data Network (DN), connected to a radio access network (RAN) with one gNodeB and two user equipment (UE) devices. This setup lets us simulate realistic wireless channel models and traffic patterns, which are essential for the subsequent data analysis phase.
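To make the topology concrete, here is a minimal Python sketch that encodes the twin's network elements as a graph and traces the user-plane path from each UE to the data network. The `LINKS` table and the `user_plane_path` helper are hypothetical illustrations, not an EXata scenario format. The link labels are the standard 3GPP reference points (N2, N3, N4, N6, N11, N12) plus the Uu radio interface, and we assume the twin wires its elements according to them.

```python
from collections import deque

# Hypothetical description of the digital-twin topology (not an EXata file format).
# Each link is labeled with its standard 3GPP reference point.
# N1 (UE-AMF NAS signaling) is omitted because it is carried over Uu and N2.
LINKS = [
    ("UE1", "gNodeB", "Uu"),
    ("UE2", "gNodeB", "Uu"),
    ("gNodeB", "AMF", "N2"),   # control plane: RAN <-> AMF
    ("gNodeB", "UPF", "N3"),   # user plane: RAN <-> UPF
    ("AMF", "SMF", "N11"),     # control plane
    ("AMF", "AUSF", "N12"),    # control plane: authentication
    ("SMF", "UPF", "N4"),      # control plane: session rules pushed to the UPF
    ("UPF", "DN", "N6"),       # user plane: UPF <-> data network
]

# Links that user data traverses (Uu carries both planes, so it is included).
USER_PLANE = {"Uu", "N3", "N6"}


def build_adjacency(links, allowed):
    """Undirected adjacency list restricted to links whose label is in `allowed`."""
    adj = {}
    for a, b, ref in links:
        if ref in allowed:
            adj.setdefault(a, []).append(b)
            adj.setdefault(b, []).append(a)
    return adj


def user_plane_path(src, dst):
    """Breadth-first search over user-plane links; returns the node path or None."""
    adj = build_adjacency(LINKS, USER_PLANE)
    parent = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:  # walk back to the source
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt in adj.get(node, []):
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    return None
```

For example, `user_plane_path("UE1", "DN")` yields `["UE1", "gNodeB", "UPF", "DN"]`, while the AUSF is unreachable on the user plane because it only connects through the control-plane N12 link. Separating the planes this way mirrors how latency-relevant traffic flows through the twin.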

Data Analysis to Identify Real-to-Simulation Gaps

Our ongoing analysis of 5G network performance under different traffic types, constant bit rate (CBR) and FTP, focuses on five key performance indicators (KPIs): latency, throughput, packet loss, jitter, and buffering rate. Using time-series and histogram analyses, we are identifying trends and examining how traffic load and the number of UEs affect network performance. Initial results indicate a significant inverse correlation between latency and throughput, and show that heavier traffic increases jitter and packet loss. These statistical findings will guide our network optimization strategies.

As the work progresses, we aim to minimize the discrepancies between digital twin simulations and real measurements from the Verizon 5G lab. This gap analysis is pivotal for refining our simulation models and lays the foundation for developing and deploying AI/ML algorithms. Understanding where simulation and reality diverge lets us better predict how an AI/ML solution might affect network performance differently in simulated and real settings, which improves the accuracy and effectiveness of our AI-driven optimization strategies.
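The KPI and gap computations described above could look roughly like the following minimal Python sketch. It assumes per-packet logs with send and receive timestamps and sequence numbers; the function names are hypothetical, and the choice of the RFC 3550 interarrival jitter estimator and the two-sample Kolmogorov-Smirnov (KS) statistic as the real-to-simulation gap metric are our assumptions, since the project does not specify its exact definitions or tests. Buffering rate is omitted because its definition is not given here.

```python
import math
from bisect import bisect_right
from statistics import mean


def throughput_mbps(total_bytes, duration_s):
    """Average throughput in Mbit/s over a measurement window."""
    return total_bytes * 8 / duration_s / 1e6


def loss_ratio(seq_numbers):
    """Fraction of packets missing between the lowest and highest sequence number seen.

    Assumes no sequence-number wraparound; packets lost after the last
    received one are not counted.
    """
    expected = max(seq_numbers) - min(seq_numbers) + 1
    received = len(set(seq_numbers))  # duplicates count once
    return (expected - received) / expected


def rfc3550_jitter(send_times, recv_times):
    """Interarrival jitter estimate in the style of RFC 3550: J += (|D| - J) / 16."""
    transit = [r - s for s, r in zip(send_times, recv_times)]
    j = 0.0
    for prev, cur in zip(transit, transit[1:]):
        j += (abs(cur - prev) - j) / 16.0
    return j


def pearson(x, y):
    """Pearson correlation, e.g. between per-interval latency and throughput."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def ks_statistic(sim, real):
    """Two-sample KS statistic: the largest gap between the two empirical CDFs."""
    sim, real = sorted(sim), sorted(real)
    d = 0.0
    # Both ECDFs only change at sample points, so checking those points suffices.
    for x in sorted(set(sim) | set(real)):
        f_sim = bisect_right(sim, x) / len(sim)
        f_real = bisect_right(real, x) / len(real)
        d = max(d, abs(f_sim - f_real))
    return d
```

A KS statistic of 0 means the simulated and measured distributions of a KPI coincide, while 1 means they do not overlap at all, which would flag that KPI for model tuning. Comparing full distributions rather than means matters for metrics such as jitter and tail latency, where the twin could match the average but miss the spread.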